Our AI System Wins VC Track at Stanford Law School Hackathon

For over 4,400 years, the fundamental way we use written contracts has remained largely unchanged. From ancient agreements on clay tablets to current contracts in PDFs, humans have always read contractual rules written in natural language and applied them to specific situations. However, for today's portfolios of ever more complex contracts, this traditional approach is becoming less and less effective.

Problem

Contracts have become complex enough to challenge human cognitive abilities, and insurers are facing rising costs and risks as a result. An executive at a well-known insurer described an incident where shareholders pressured executives to properly manage undetected silent cybersecurity risks in portfolios of property insurance contracts with business interruption coverage.

A data scientist at a well-known insurer described an incident where a claims adjuster discovered a one-word error in an aviation insurance policy that doubled their risk exposure.

Solution

Because computers can now understand natural language and process linguistic logic, Hammurabi developed AI systems for insurance companies to automatically, reliably, and cost-effectively understand their contracts.

Our software was designed to apply the logic contained in a contract to a scenario. You can use it to automatically see how contracts behave in various situations, and so understand potential risks, obligations, and outcomes.

Team Hammurabi is Husein and me: Husein has a background in law, and I have a background in ML.

Our Winning System

At this hackathon, Husein and I (Team Hammurabi) demonstrated an early iteration of an AI system that helps insurance companies automatically reason about a variety of contracts. The system was designed to process a contract’s logic, allowing users to determine what happens under the terms of a contract in a given scenario. Unlike advanced language models at the time, this system iteratively reflected on the scenario and contract logic, asking clarifying questions to avoid unfounded assumptions and improve accuracy before reaching a final conclusion.
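To make the "ask before assuming" idea concrete, here is a minimal sketch in Python. It is not our actual system: the clauses, the fact names, and the `Clause`, `evaluate`, and `resolve` helpers are all hypothetical, and the contract logic is hand-written rather than extracted from a real policy by a language model. It only illustrates the loop of applying contract logic to a scenario and asking a clarifying question whenever a needed fact is missing.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Facts = Dict[str, bool]


@dataclass
class Clause:
    """A hypothetical, hand-coded contract clause."""
    name: str
    needs: List[str]                  # facts the clause depends on
    applies: Callable[[Facts], bool]  # the clause's condition
    outcome: str                      # result if the condition holds


def evaluate(clauses: List[Clause], facts: Facts) -> Tuple[Optional[str], List[str]]:
    """Check clauses in order; stop at the first missing fact instead of guessing."""
    for clause in clauses:
        missing = [f for f in clause.needs if f not in facts]
        if missing:
            return None, missing
        if clause.applies(facts):
            return clause.outcome, []
    return "no clause applies", []


def resolve(clauses: List[Clause], facts: Facts, ask: Callable[[str], bool]) -> str:
    """Repeat evaluation, asking a clarifying question for each missing fact."""
    facts = dict(facts)  # do not mutate the caller's scenario
    while True:
        outcome, missing = evaluate(clauses, facts)
        if outcome is not None:
            return outcome
        for name in missing:
            facts[name] = ask(name)


# Illustrative clauses for a property policy with business interruption coverage.
clauses = [
    Clause("cyber exclusion", ["caused_by_cyber_attack"],
           lambda f: f["caused_by_cyber_attack"],
           "not covered: cyber exclusion"),
    Clause("business interruption", ["operations_interrupted", "physical_damage"],
           lambda f: f["operations_interrupted"] and f["physical_damage"],
           "covered: business interruption"),
]

# The scenario leaves two facts unstated; canned answers stand in for the user.
answers = {"caused_by_cyber_attack": False, "physical_damage": True}
asked: List[str] = []


def ask(name: str) -> bool:
    asked.append(name)
    return answers[name]


result = resolve(clauses, {"operations_interrupted": True}, ask)
print(asked)
print(result)
```

In this toy scenario the loop asks about the two unstated facts before it reaches a conclusion, rather than silently assuming the loss was not caused by a cyber attack.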

Hackathon

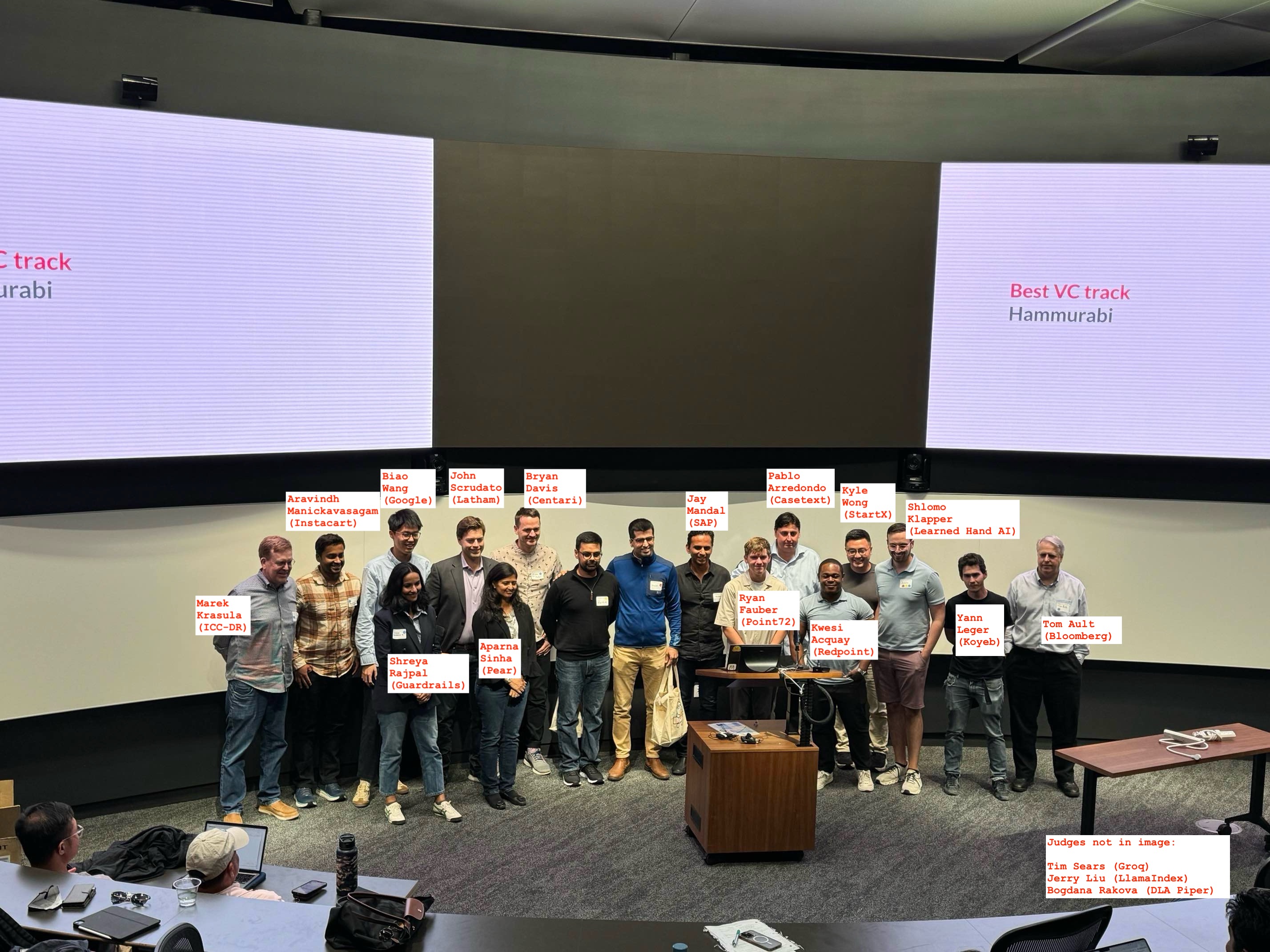

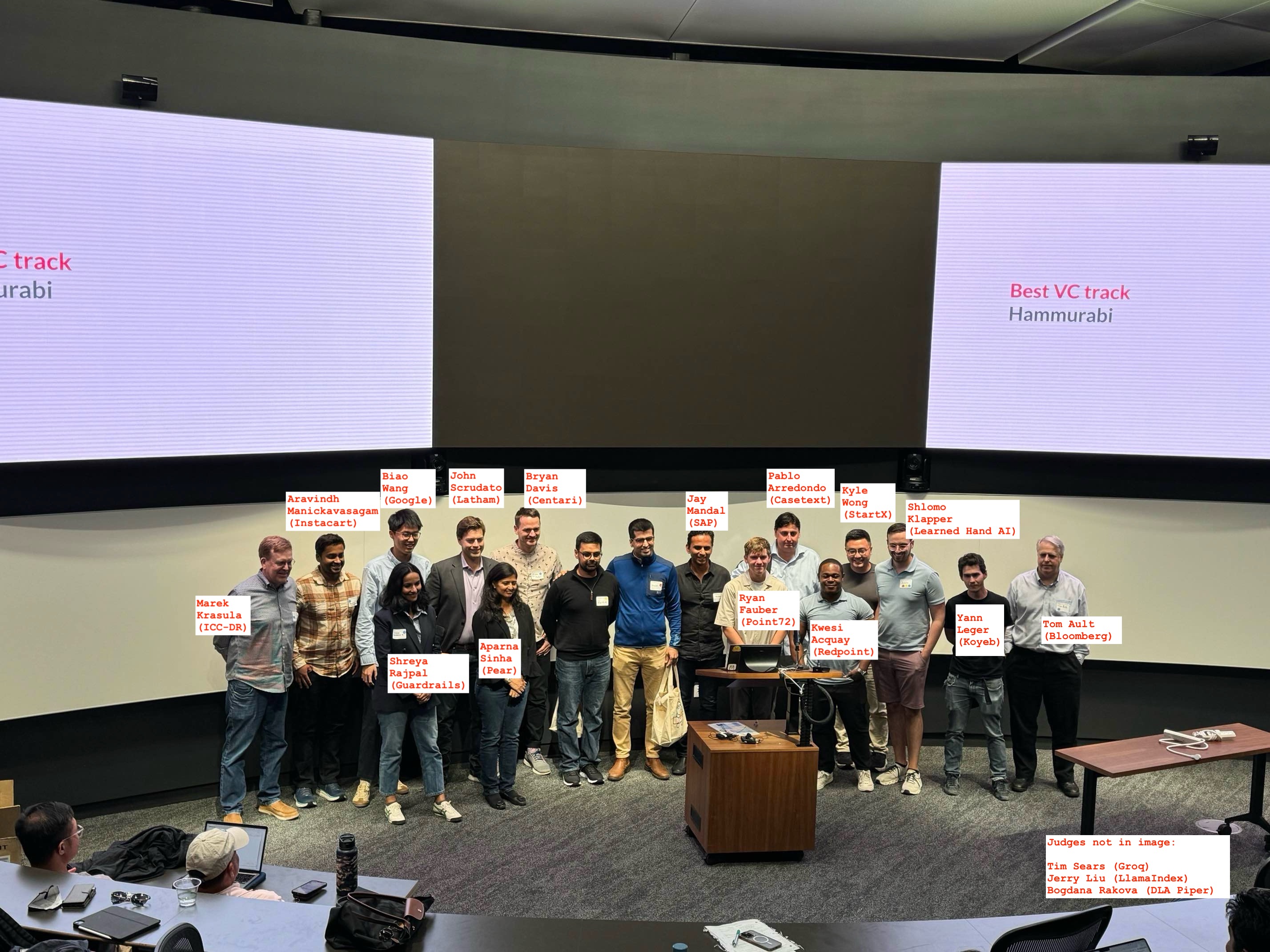

About 25 teams participated in the hackathon, made up of both industry professionals and students. Husein and I were fortunate to win the VC Track. The judges included Pablo Arredondo (founder of Casetext), Apanha Sinha (SVP at Capital One), Kwesi Acquay (partner at Redpoint Ventures), and many more, as listed in the picture below.

Husein and I posing for a picture with the hackathon judges

Takeaways

There is growing excitement around the use of Large Language Models (LLMs) in the legal field, largely due to the high cost of legal services. LLMs have the potential to significantly reduce these costs, benefiting both individual users and large organizations. In fact, most people in the United States cannot afford legal representation, which creates a substantial access-to-justice gap. Given LLMs’ capabilities with complex documents, this technology could dramatically improve quality of life by making legal support more accessible. However, there are still critical challenges that need to be addressed before widespread adoption can occur:

- Interpretability of LLM outputs: It is often difficult to understand how an LLM arrives at a particular response, making it hard for users to trust or validate the results. Improving the transparency of these systems is essential to ensure their safe use in legal contexts.

- Provability of LLM outputs: Legal and administrative systems often require verifiable and consistent reasoning, such as when interpreting healthcare insurance policies. We must be able to verify that the claims made by the LLM are correct.

- Legal liability in case of LLM errors: If an LLM provides incorrect legal advice or misinterprets a statute, it's unclear who would be held responsible for the resulting consequences: the application developer or the LLM provider?

I would love to hear your thoughts in the comments below.